- Runway unveils Gen-3 Alpha for generating AI videos from text.

- Powerful controls over style, motion, and filmmaking elements.

- Teases even more advanced models to come.

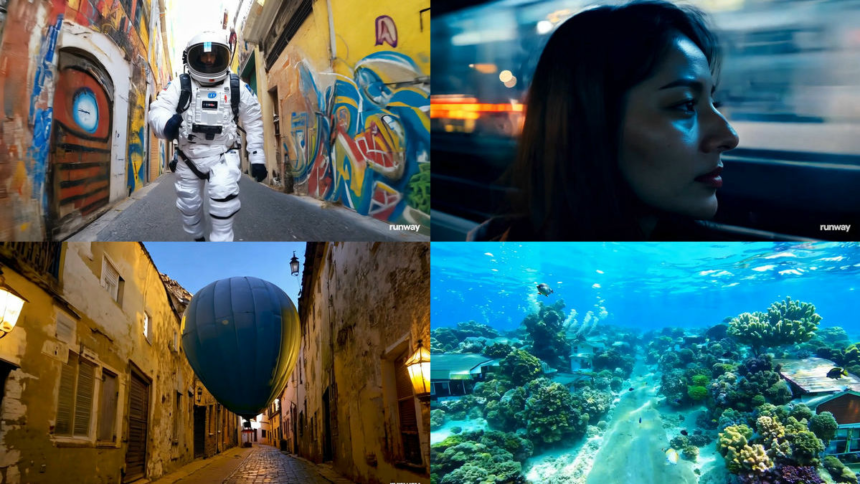

Runway, a pioneer in generative AI tools for filmmakers, has taken the wraps off Gen-3 Alpha – its latest AI model capable of generating captivating video clips from mere text descriptions and still images.

Hollywood, we have liftoff

Runway says Gen-3 Alpha generates faster and delivers a major improvement in fidelity, consistency, and motion over its predecessor, Gen-2, promising to elevate the art of video creation to new heights.

This cutting-edge model empowers creators to exercise fine-grained control over the structure, style, and motion of the videos it generates.

From expressive human characters to cinematic transitions and precise keyframing, Gen-3 Alpha speaks the language of filmmakers fluently.

The future on fast forward

While Gen-3 Alpha currently maxes out at 10-second clips and still struggles with complex interactions between characters and objects, this is just the beginning.

Runway co-founder Anastasis Germanidis teases a family of next-gen models trained on upgraded infrastructure, promising even greater capabilities on the horizon.